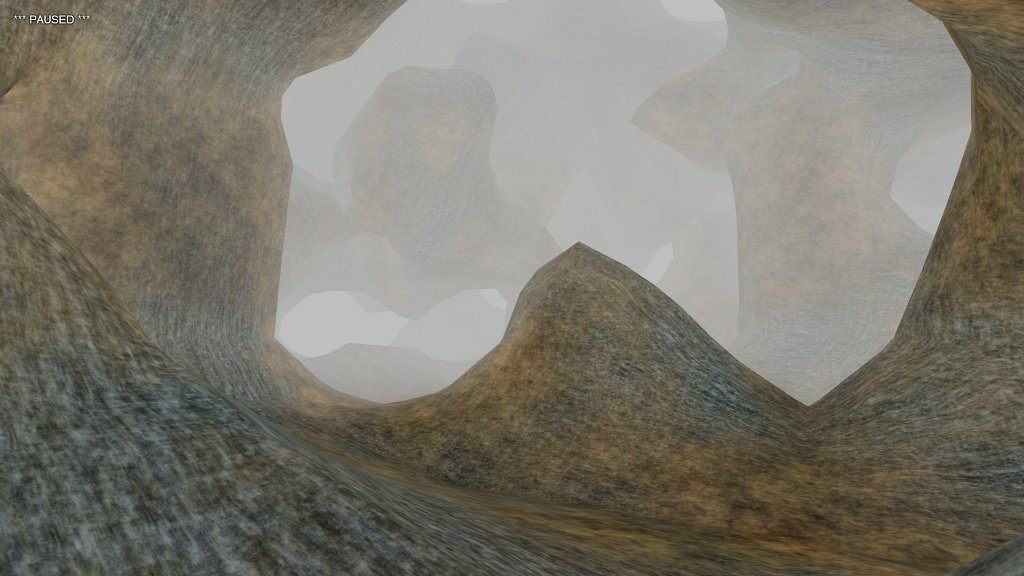

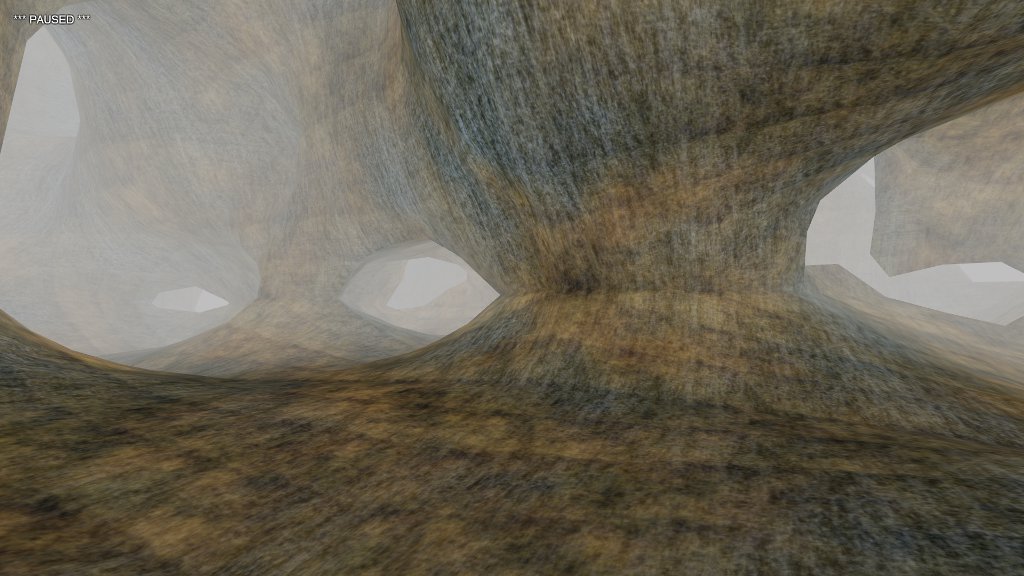

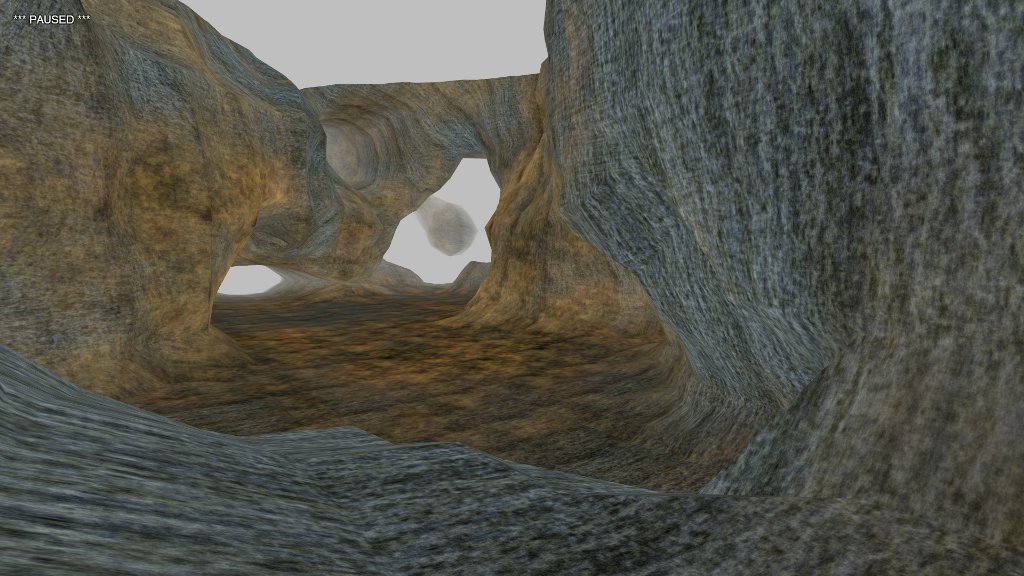

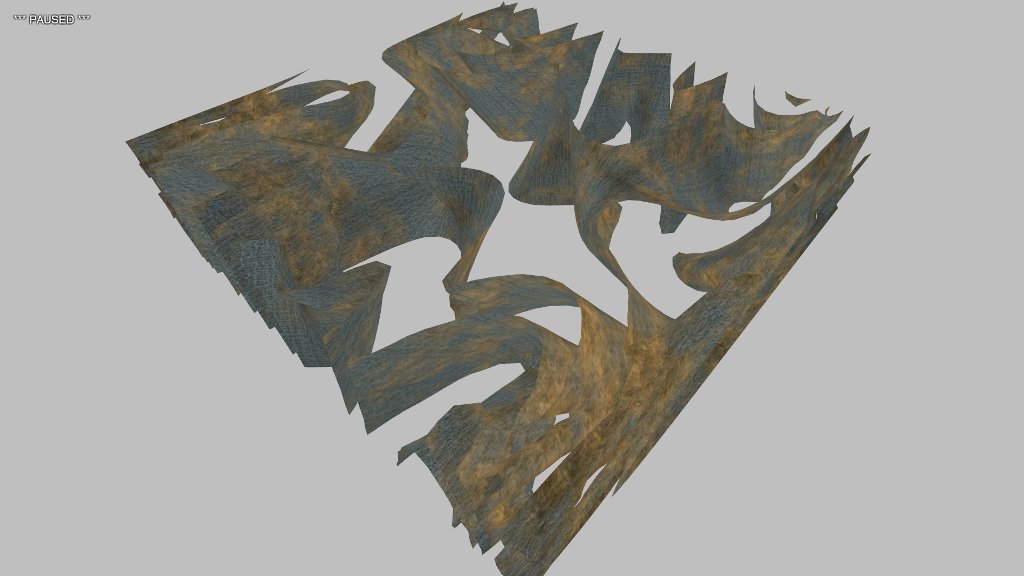

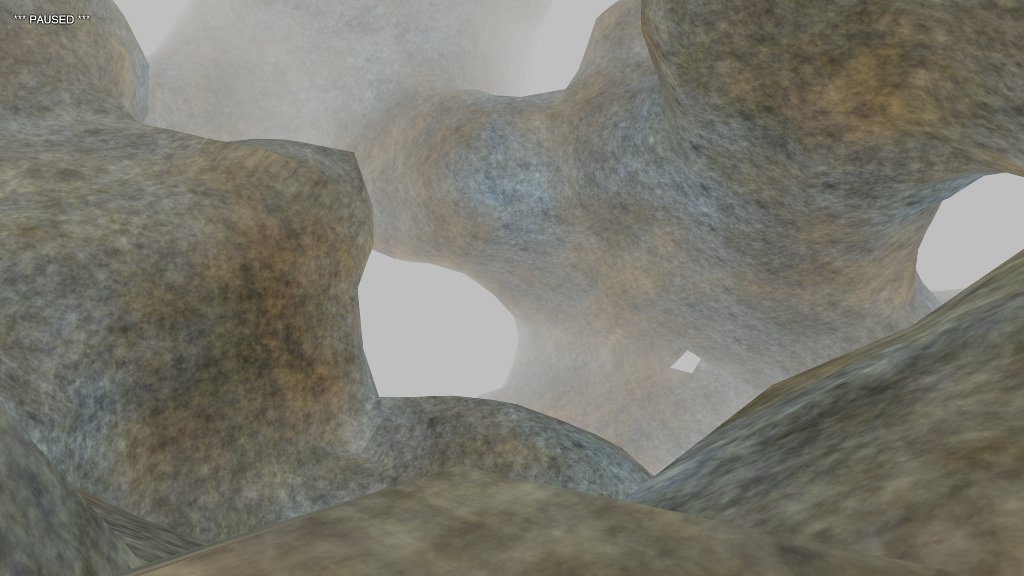

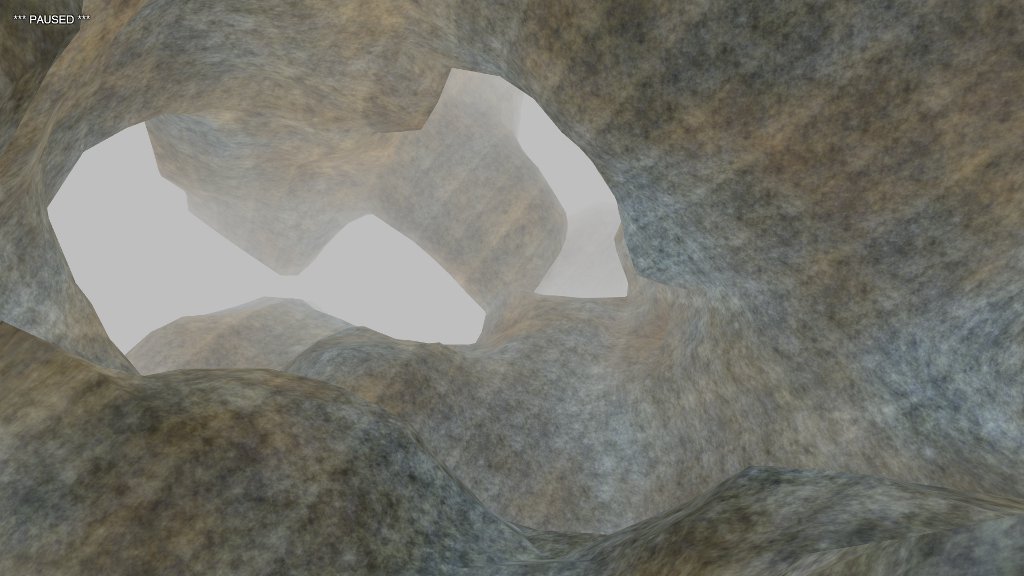

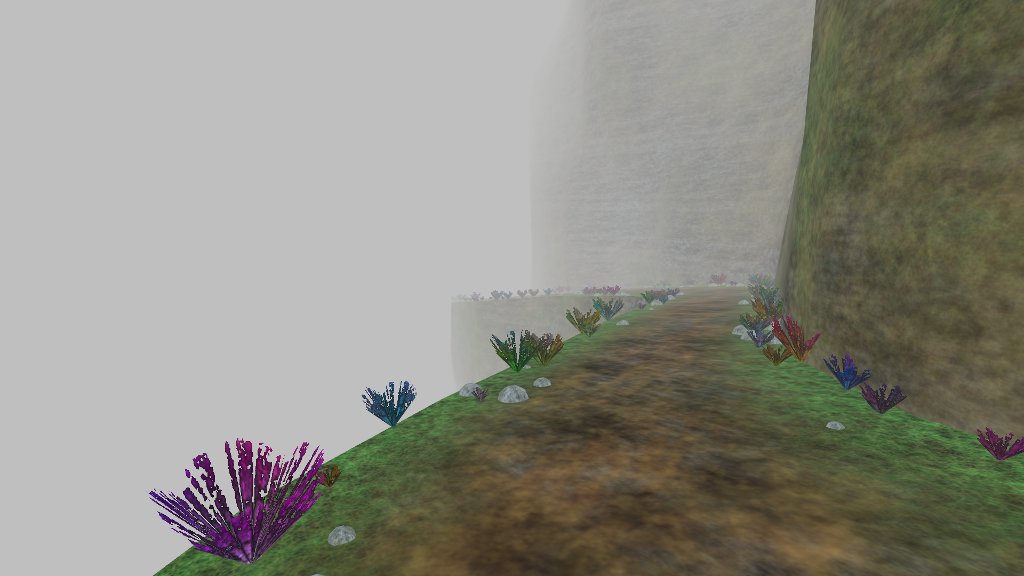

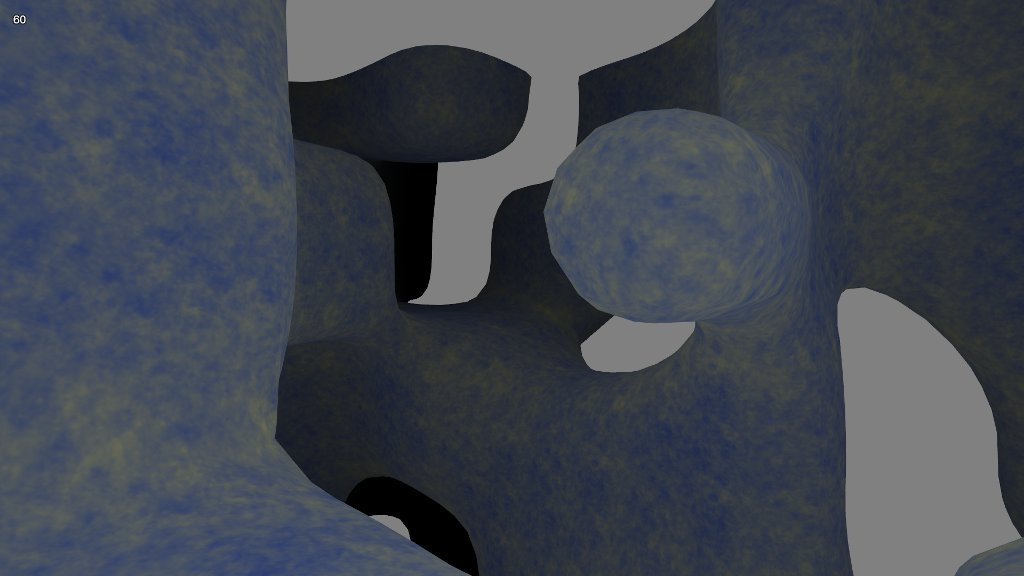

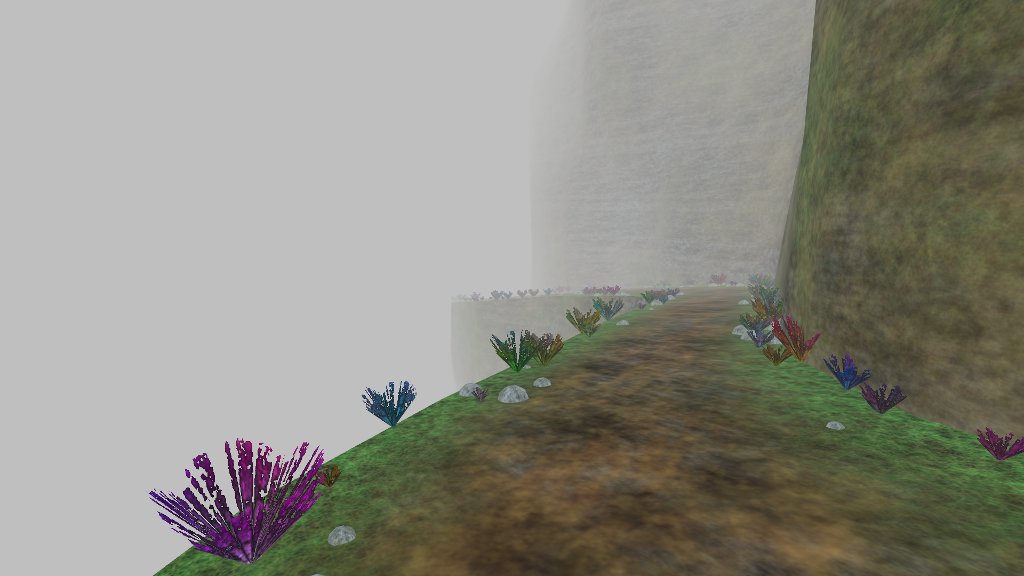

Ever since Web Workers arrived, I’ve been thinking about how to use them in my own stuff. I’ve been developing a game that involves a procedurally generated cliff path and I want that path, like all good things, to go on forever.

(Want to see it in action? Try the Live Demo. WSAD moves, Q for fullscreen.)

I update the scene every time the player moves a set distance from the last update. If I do this within an animation frame, I’m going to get a drop in frame rate, and possibly a visible skip in the game experience. That’s an immersion killer. Workers to the rescue!

Well, not so fast.

The GL context itself can’t be used in a worker thread, as it’s derived from a DOM object. I can’t update my display meshes directly, and I can’t send them back and forth, because function members don’t survive the trip between threads. Only data is allowed. So: data it is. Generate updates in the worker thread, and hand the results over to the main thread for display. (I was worried that objects would be serialized JSON-style across thread boundaries, but Chrome and Firefox use structured cloning, a higher-performance binary copy of the data, instead.)
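To make the “only data is allowed” rule concrete, here is a minimal sketch using `structuredClone`, which applies the same copying algorithm that `postMessage` uses. It assumes a runtime that exposes `structuredClone` globally (recent browsers, Node.js 17 and later), and the `Vec` type is a hypothetical stand-in, not part of SOAR. In current engines, prototype methods are simply left behind, while an own property that holds a function makes the clone throw.

```javascript
// Hypothetical vector type with a method on its prototype.
function Vec(x, y, z) {
    this.x = x;
    this.y = y;
    this.z = z;
}
Vec.prototype.length = function() {
    return Math.sqrt(this.x * this.x + this.y * this.y + this.z * this.z);
};

// Cloning keeps the data fields but not the prototype, so the method is gone.
var original = new Vec(3, 4, 0);
var copy = structuredClone(original);
var methodSurvived = typeof copy.length === "function";

// Typed arrays are copied as typed arrays, which suits vertex data.
var verts = new Float32Array([0, 1, 2]);
var vertsCopy = structuredClone({ data: verts }).data;

// An own property holding a function cannot be cloned at all.
var cloneError = null;
try {
    structuredClone({ surface: function(y, z) { return y + z; } });
} catch (e) {
    cloneError = e.name;
}

console.log(copy.x, copy.y, copy.z, methodSurvived, cloneError);
```

This is why the surface maps (plain arrays of numbers) can be shipped across, while the surface functions have to be rebuilt on each side.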

Creating a thread is simple enough.

```javascript
T.worker = new Worker("thread.js");
T.worker.onmessage = function(e) {
    T.handle(e);
};
T.worker.onerror = function(e) {
    console.log("worker thread error: " +
        e.message + " at line " +
        e.lineno + " in file " +
        e.filename);
};
```

Sadly, that error handler is all the debugging support you’re likely to get. Because the worker thread is running inside another page context, you can’t set a breakpoint in it. Back to the heady days of printf debugging! Ah, memories.
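Since logging is the main debugging tool here, one option is to route the worker’s log lines through the same message channel it already uses. This is a minimal sketch rather than the game’s code: `makeLogger`, the `"log"` command name, and the message shape are all assumptions. In a real worker, `post` would be something like `function(m) { postMessage(m); }`, and the main thread’s message handler would print `e.data.text`; here an array stands in so the snippet runs anywhere.

```javascript
// Build a printf-style logger that sends each line through `post`.
function makeLogger(post) {
    return function log() {
        // Strings pass through; everything else is shown as JSON.
        var parts = Array.prototype.slice.call(arguments).map(function(a) {
            return typeof a === "string" ? a : JSON.stringify(a);
        });
        post({ cmd: "log", text: parts.join(" ") });
    };
}

// Stand-in for the worker's postMessage: collect messages in an array.
var sent = [];
var log = makeLogger(function(msg) { sent.push(msg); });

log("generate at", { z: 12 }, 3);
console.log(sent[0].text);
```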

After creating the thread, I want to tell it to construct whatever objects it needs to fulfill my requests—in this case, the objects that describe the cliff path surfaces. There’s a problem, however, as I also need those objects in the main thread to handle collisions. I can’t share the surface objects directly as they contain function members. So, I create the surface maps, which are data-only, and ship those across.

```javascript
// generate road and cliff surface maps
this.road.map = SOAR.space.make(128);
SOAR.pattern.randomize(this.road.map, T.seed(), 0, 1);
this.road.height = SOAR.space.makeLine(this.road.map, 6, 0.05);

this.cliff.map = SOAR.space.make(128, 128);
SOAR.pattern.walk(this.cliff.map, T.seed(), 8, 0.05, 1, 0.5, 0.5, 0.5, 0.5);
SOAR.pattern.normalize(this.cliff.map, 0, 1);
var surf0 = SOAR.space.makeSurface(this.cliff.map, 10, 0.05, 0.1);
var surf1 = SOAR.space.makeSurface(this.cliff.map, 5, 0.25, 0.5);
var surf2 = SOAR.space.makeSurface(this.cliff.map, 1, 0.75, 1.5);
this.cliff.surface = function(y, z) {
    return surf0(y, z) + surf1(y, z) + surf2(y, z);
};

// send the maps to the worker thread and let it initialize
T.worker.postMessage({
    cmd: "init",
    map: {
        road: this.road.map,
        cliff: this.cliff.map
    },
    seed: {
        rocks: T.seed(),
        brush: T.seed()
    }
});
```

On the thread side, a handler picks up the message and routes it to the necessary function.

```javascript
this.addEventListener("message", function(e) {
    switch(e.data.cmd) {
    case "init":
        init(e.data);
        break;
    case "generate":
        generate(e.data.pos);
        break;
    }
}, false);
```

The initialization function uses the surface maps to build surface objects, plus a set of “dummy” mesh objects.

```javascript
function init(data) {
    // received height map from main thread; make line object
    road.height = SOAR.space.makeLine(data.map.road, 6, 0.05);
    // generate dummy mesh for vertex and texture coordinates
    road.mesh = SOAR.mesh.create(display);
    road.mesh.add(0, 3);
    road.mesh.add(0, 2);

    // received surface map from main thread; make surface object
    var surf0 = SOAR.space.makeSurface(data.map.cliff, 10, 0.05, 0.1);
    var surf1 = SOAR.space.makeSurface(data.map.cliff, 5, 0.25, 0.5);
    var surf2 = SOAR.space.makeSurface(data.map.cliff, 1, 0.75, 1.5);
    cliff.surface = function(y, z) {
        return surf0(y, z) + surf1(y, z) + surf2(y, z);
    };
    cliff.mesh = SOAR.mesh.create(display);
    cliff.mesh.add(0, 3);
    cliff.mesh.add(0, 2);

    // received RNG seed
    brush.seed = data.seed.brush;
    brush.mesh = SOAR.mesh.create(display);
    brush.mesh.add(0, 3);
    brush.mesh.add(0, 2);

    rocks.seed = data.seed.rocks;
    rocks.mesh = SOAR.mesh.create(display);
    rocks.mesh.add(0, 3);
    rocks.mesh.add(0, 2);
}
```

Creating these dummy objects might seem strange. Why not just use arrays? Well, the mesh object wraps a vertex array plus an index array, and provides methods to deal with growing these arrays and handling stride and so on. It’s more convenient and means I have to write less code. When I send the mesh object across the thread boundary, I can simply treat it as a data object.
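To show why a wrapper is handier than bare arrays, here is a rough sketch of a data-only mesh that grows its vertex buffer on demand. `DataMesh` and its members are hypothetical and only loosely modelled on the calls used in this post (`set`, `reset`); SOAR’s real mesh object isn’t shown here and will differ.

```javascript
// Hypothetical data-only mesh: a growable Float32Array plus a write cursor.
function DataMesh(stride) {
    this.stride = stride;                      // floats per vertex
    this.data = new Float32Array(stride * 4);  // small initial capacity
    this.length = 0;                           // floats currently in use
}

// Double the buffer until `extra` more floats fit.
DataMesh.prototype.grow = function(extra) {
    var needed = this.length + extra;
    if (needed <= this.data.length) return;
    var size = this.data.length;
    while (size < needed) size *= 2;
    var bigger = new Float32Array(size);
    bigger.set(this.data.subarray(0, this.length));
    this.data = bigger;
};

// Append one vertex; expects exactly `stride` numeric arguments.
DataMesh.prototype.set = function() {
    this.grow(this.stride);
    for (var i = 0; i < this.stride; i++) {
        this.data[this.length++] = arguments[i];
    }
};

// Rewind the cursor but keep the allocated buffer for reuse.
DataMesh.prototype.reset = function() {
    this.length = 0;
};

// 3 position floats + 2 texture floats per vertex.
var mesh = new DataMesh(5);
for (var v = 0; v < 10; v++) {
    mesh.set(v, 0, 0, 0.5, 0.5);
}
console.log(mesh.length, mesh.data.length);
```

Once an object like this crosses the thread boundary, only `data` and `length` matter; the methods stay behind, which is exactly what treating it as a data object means.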

On every frame update, I check the player’s position, and once it’s moved a set distance from the position of the last update, I tell the worker thread to get busy.

```javascript
update: function() {
    SOAR.capture.update();
    T.player.update();

    // update all detail objects if we've moved far enough
    var ppos = T.player.camera.position;
    if (T.detailUpdate.distance(ppos) > T.DETAIL_DISTANCE) {
        T.worker.postMessage({
            cmd: "generate",
            pos: ppos
        });
        T.detailUpdate.copy(ppos);
    }

    T.draw();
}
```
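The trigger logic is independent of rendering, so it can be sketched on its own. The helper below is a hypothetical stand-in for `T.detailUpdate` and `T.DETAIL_DISTANCE`: positions are plain `{x, y, z}` objects, and `onTrigger` marks the spot where the `postMessage` call would go.

```javascript
// Call onTrigger whenever pos is more than `threshold` from the last trigger point.
function makeDistanceTrigger(threshold, onTrigger) {
    var last = { x: 0, y: 0, z: 0 };
    return function check(pos) {
        var dx = pos.x - last.x;
        var dy = pos.y - last.y;
        var dz = pos.z - last.z;
        if (Math.sqrt(dx * dx + dy * dy + dz * dz) > threshold) {
            // hand over a plain-data copy, then remember where we fired
            onTrigger({ x: pos.x, y: pos.y, z: pos.z });
            last.x = pos.x;
            last.y = pos.y;
            last.z = pos.z;
            return true;
        }
        return false;
    };
}

// Walk along z in steps of 0.5 with a threshold of 2.
var requests = [];
var check = makeDistanceTrigger(2, function(pos) { requests.push(pos); });
for (var step = 1; step <= 10; step++) {
    check({ x: 0, y: 0, z: step * 0.5 });
}
console.log(requests.length);
```

Most frames do nothing but one distance check, so the worker only hears from the main thread when there is new ground to generate.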

The worker handles the request by populating the dummy meshes and sending them to the display thread.

function generate(p) {

// lock the z-coordinate to integer boundaries

p.z = Math.floor(p.z);

// road is modulated xz-planar surface

road.mesh.reset();

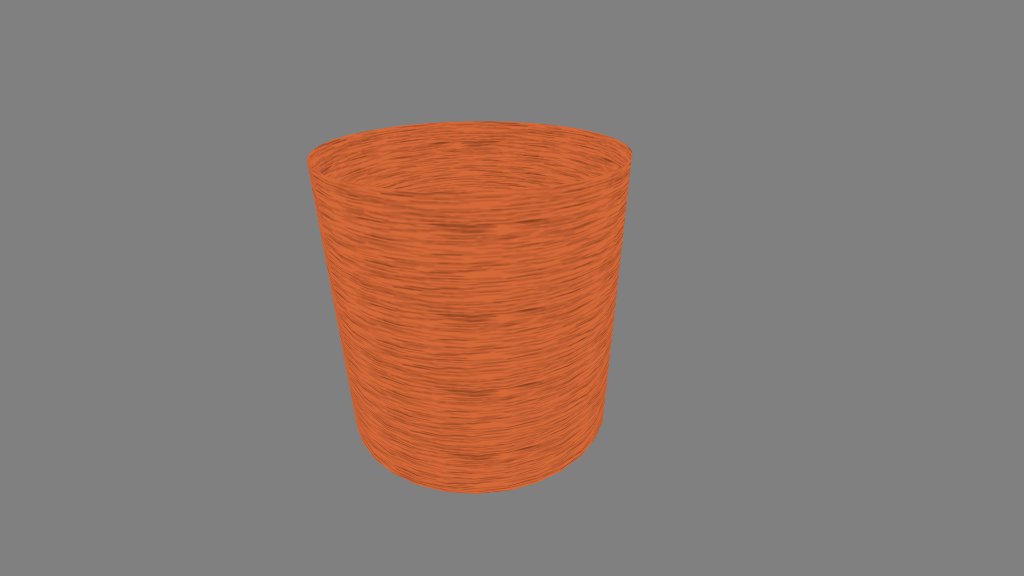

indexMesh(road.mesh, 2, 64, function(xr, zr) {

var z = p.z + (zr - 0.5) * 16;

var y = road.height(z);

var x = cliff.surface(y, z) + (xr - 0.5);

road.mesh.set(x, y, z, xr, z);

}, false);

// cliff is modulated yz-planar surface

// split into section above path and below

cliff.mesh.reset();

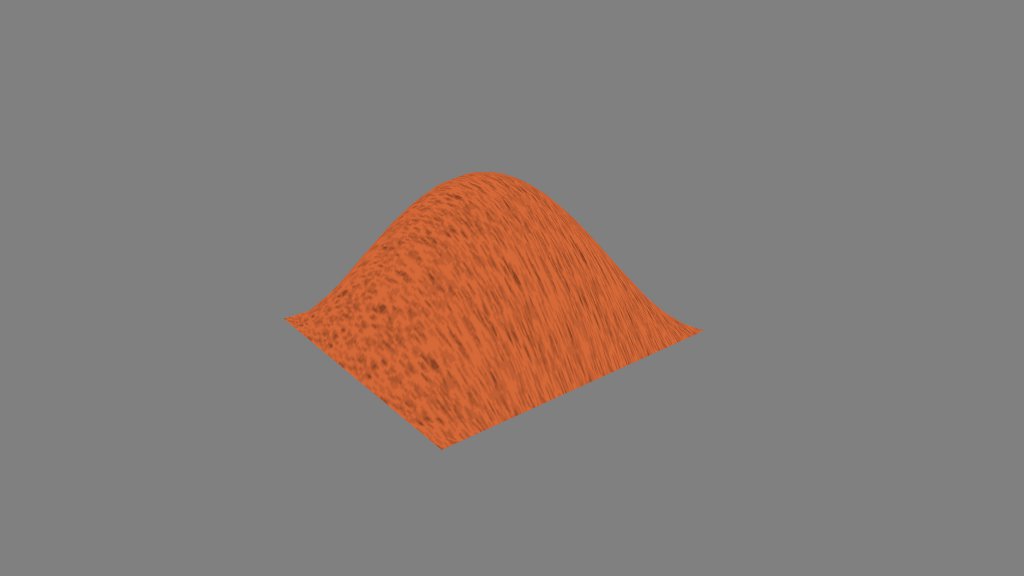

indexMesh(cliff.mesh, 32, 64, function(yr, zr) {

var z = p.z + (zr - 0.5) * 16;

var y = road.height(z) + yr * 8;

var x = cliff.surface(y, z) + 0.5;

cliff.mesh.set(x, y, z, y, z);

}, false);

indexMesh(cliff.mesh, 32, 64, function(yr, zr) {

var z = p.z + (zr - 0.5) * 16;

var y = road.height(z) - yr * 8;

var x = cliff.surface(y, z) - 0.5;

cliff.mesh.set(x, y, z, y, z);

}, true);

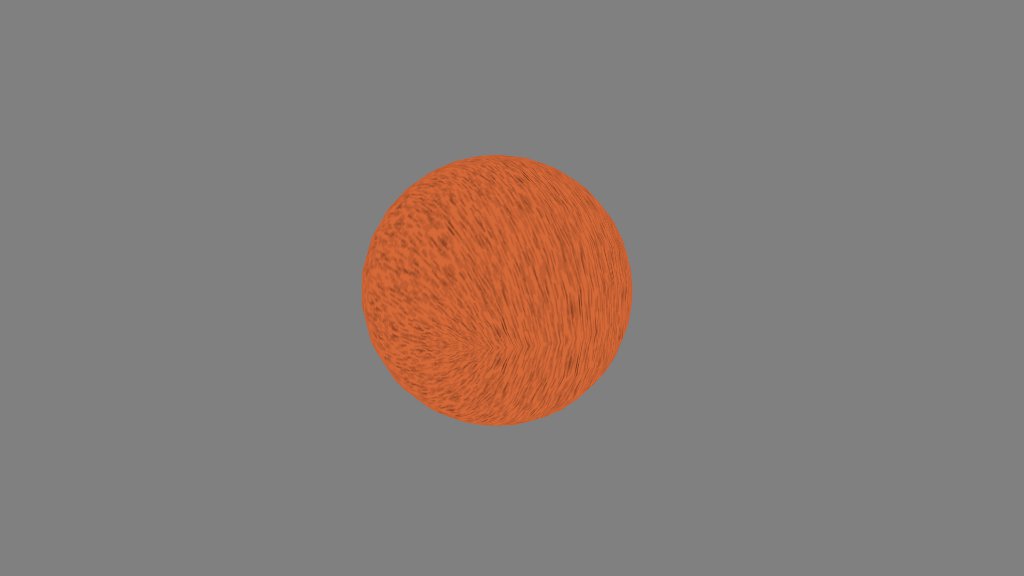

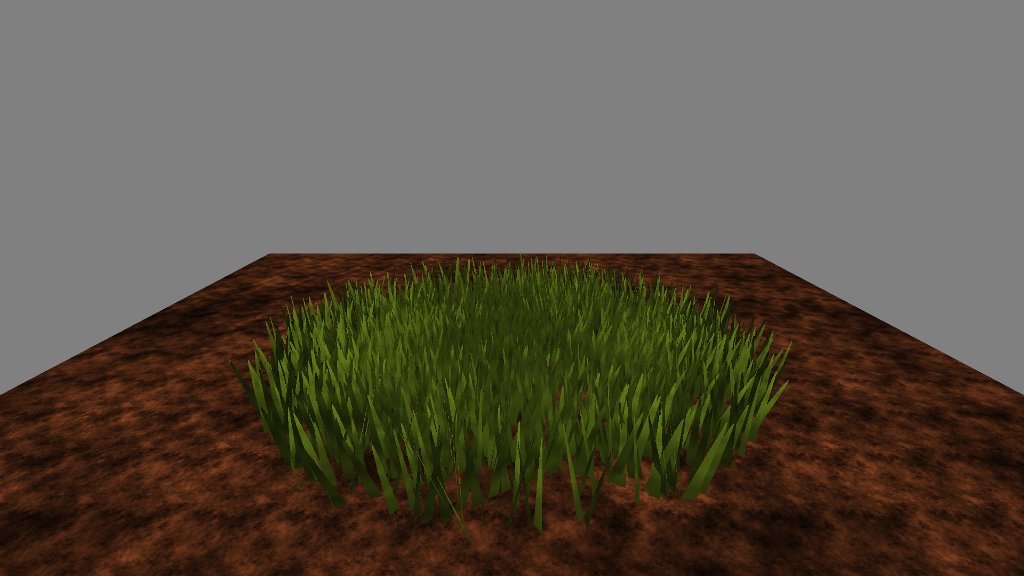

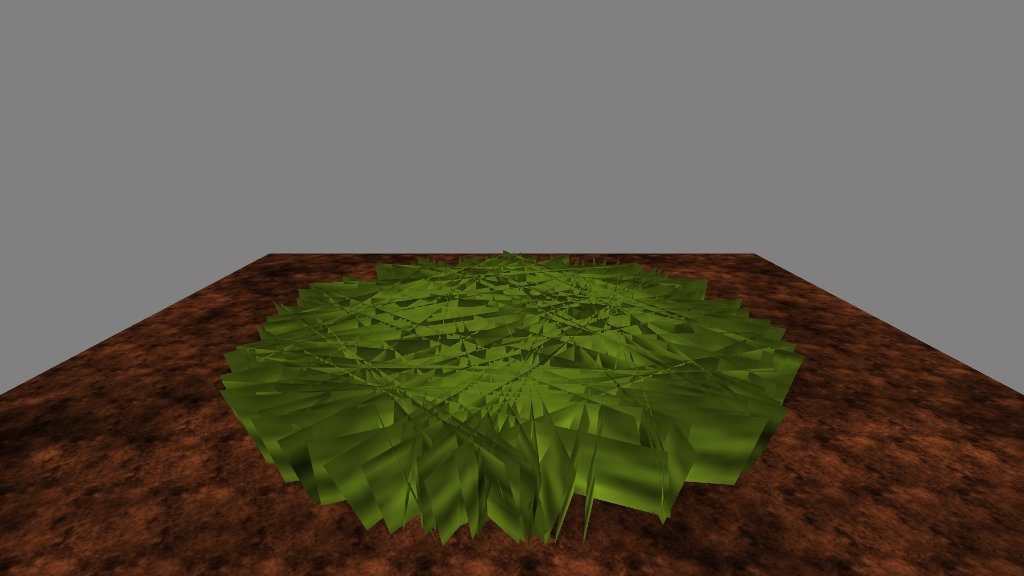

// brush and rocks are generated in "cells"

// cells occur on integral z boundaries (z = 0, 1, 2, ...)

// and are populated using random seeds derived from z-value

brush.mesh.reset();

(function(mesh) {

var i, j, k;

var iz, x, y, z, a, r, s;

// 16 cells because path/cliff is 16 units long in z-direction

for (i = -8; i < 8; i++) {

iz = p.z + i;

// same random seed for each cell

rng.reseed(Math.abs(iz * brush.seed + 1));

// place 25 bits of brush at random positions/sizes

for (j = 0; j < 25; j++) {

s = rng.get() < 0.5 ? -1 : 1;

z = iz + rng.get(0, 1);

y = road.height(z) - 0.0025;

x = cliff.surface(y, z) + s * (0.5 - rng.get(0, 0.15));

r = rng.get(0.01, 0.1);

a = rng.get(0, Math.PI);

// each brush consists of 4 triangles

// rotated around the center point

for (k = 0; k < 4; k++) {

mesh.set(x, y, z, 0, 0);

mesh.set(x + r * Math.cos(a), y + r, z + r * Math.sin(a), -1, 1);

a = a + Math.PI * 0.5;

mesh.set(x + r * Math.cos(a), y + r, z + r * Math.sin(a), 1, 1);

}

}

}

})(brush.mesh);

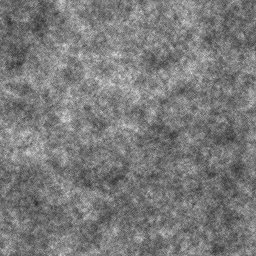

rocks.mesh.reset();

(function(mesh) {

var o = SOAR.vector.create();

var i, j, k;

var iz, x, y, z, r, s;

var tx, ty;

for (i = -8; i < 8; i++) {

iz = p.z + i;

// same random seed for each cell--though not

// the same as the brush, or rocks would overlap!

rng.reseed(Math.abs(iz * rocks.seed + 2));

// twenty rocks per cell

for (j = 0; j < 20; j++) {

s = rng.get() < 0.5 ? -1 : 1;

z = iz + rng.get(0, 1);

y = road.height(z) - 0.005;

x = cliff.surface(y, z) + s * (0.5 - rng.get(0.02, 0.25));

r = rng.get(0.01, 0.03);

tx = rng.get(0, 5);

ty = rng.get(0, 5);

// each rock is an upturned half-sphere

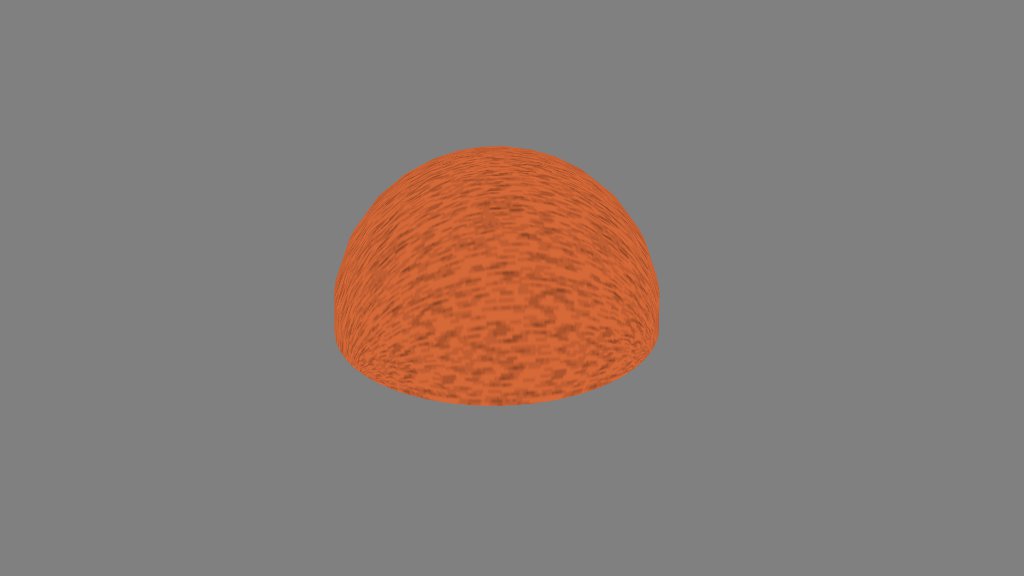

indexMesh(mesh, 6, 6, function(xr, zr) {

o.x = 2 * (xr - 0.5);

o.z = 2 * (zr - 0.5);

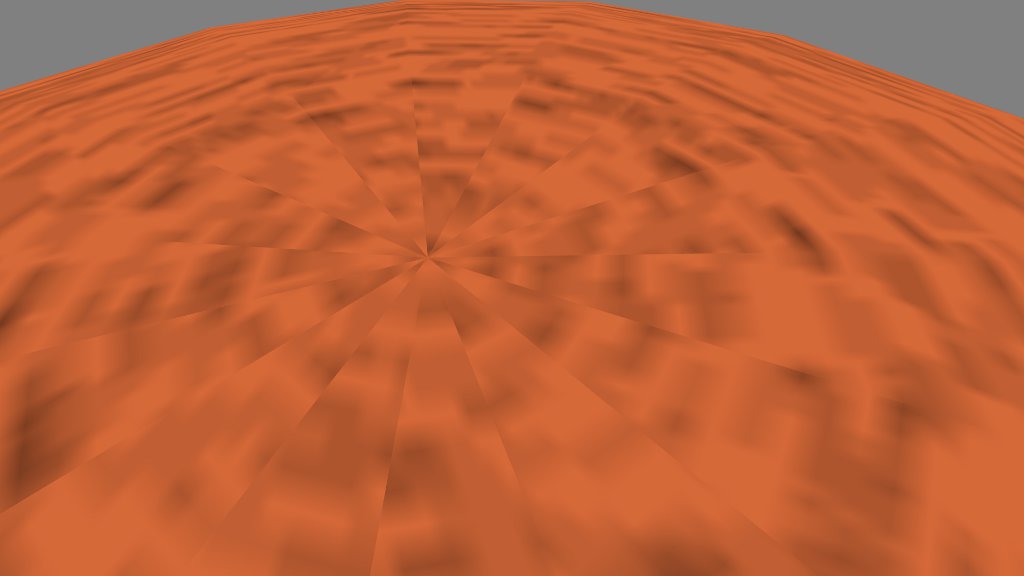

o.y = (1 - o.x * o.x) * (1 - o.z * o.z);

o.norm().mul(r);

mesh.set(x + o.x, y + o.y, z + o.z, xr + tx, zr + ty);

}, false);

}

}

})(rocks.mesh);

// send mesh data back to main UI

postMessage({

cmd: "build-meshes",

cliff: cliff.mesh,

road: road.mesh,

brush: brush.mesh,

rocks: rocks.mesh

});

}
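The cell scheme is what lets the path go on forever without storing anything: a cell’s contents depend only on its integer z index and a base seed, so walking away and coming back regenerates the same brush and rocks. The sketch below isolates that property. The generator is mulberry32, swapped in because the `rng` object used above isn’t shown; `cellItems`, its field names, and the integer base seed are likewise assumptions.

```javascript
// mulberry32: a small seeded generator returning floats in [0, 1).
function mulberry32(a) {
    return function() {
        a |= 0;
        a = (a + 0x6D2B79F5) | 0;
        var t = Math.imul(a ^ (a >>> 15), 1 | a);
        t = (t + Math.imul(t ^ (t >>> 7), 61 | t)) ^ t;
        return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
    };
}

// Generate the items for one cell from its z index and a base seed.
function cellItems(iz, baseSeed, count) {
    // reseed per cell, so a given cell always produces the same sequence
    var rand = mulberry32(Math.abs(iz * baseSeed + 1));
    var items = [];
    for (var j = 0; j < count; j++) {
        items.push({
            side: rand() < 0.5 ? -1 : 1,      // which edge of the path
            z: iz + rand(),                   // position inside the cell
            radius: 0.01 + rand() * 0.02      // size between 0.01 and 0.03
        });
    }
    return items;
}

// Visiting cell 7 twice yields identical contents.
var first = cellItems(7, 12345, 20);
var again = cellItems(7, 12345, 20);
var same = JSON.stringify(first) === JSON.stringify(again);
console.log(same);
```

Using a different offset per object type (the `+ 1` and `+ 2` above) keeps brush and rocks in the same cell from drawing the same random sequence.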

Once it’s received an update, the display thread copies over the mesh data.

```javascript
copyMesh: function(dest, src) {
    dest.load(src.data);
    dest.length = src.length;
    dest.loadIndex(src.indexData);
    dest.indexLength = src.indexLength;
},

build: function(data) {
    this.cliff.mesh.reset();
    this.copyMesh(this.cliff.mesh, data.cliff);
    this.cliff.mesh.build(true);

    this.road.mesh.reset();
    this.copyMesh(this.road.mesh, data.road);
    this.road.mesh.build(true);

    this.brush.mesh.reset();
    this.copyMesh(this.brush.mesh, data.brush);
    this.brush.mesh.build(true);

    this.rocks.mesh.reset();
    this.copyMesh(this.rocks.mesh, data.rocks);
    this.rocks.mesh.build(true);
}
```
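The `T.handle` function wired up when the worker was created isn’t shown in this post. A plausible sketch, assuming it routes on the same `cmd` field the worker uses, might look like the following; the loop is also a more compact way to write the four repeated stanzas in `build`. The `scene` object and `fakeMesh` are hypothetical and exist only so the snippet runs outside the game.

```javascript
// Fake display mesh that records calls; a stand-in for a real SOAR mesh.
function fakeMesh() {
    return {
        calls: [],
        reset: function() { this.calls.push("reset"); },
        load: function(d) { this.data = d; this.calls.push("load"); },
        loadIndex: function(d) { this.indexData = d; this.calls.push("loadIndex"); },
        build: function() { this.calls.push("build"); }
    };
}

var scene = {
    cliff: { mesh: fakeMesh() },
    road: { mesh: fakeMesh() },
    brush: { mesh: fakeMesh() },
    rocks: { mesh: fakeMesh() },

    copyMesh: function(dest, src) {
        dest.load(src.data);
        dest.length = src.length;
        dest.loadIndex(src.indexData);
        dest.indexLength = src.indexLength;
    },

    // Same steps as build above, looped over the four object names.
    build: function(data) {
        var self = this;
        ["cliff", "road", "brush", "rocks"].forEach(function(name) {
            var mesh = self[name].mesh;
            mesh.reset();
            self.copyMesh(mesh, data[name]);
            mesh.build(true);
        });
    },

    // Assumed shape of the main-thread handler: route on e.data.cmd.
    handle: function(e) {
        switch (e.data.cmd) {
        case "build-meshes":
            this.build(e.data);
            break;
        }
    }
};

// Simulate a message arriving from the worker.
function part(n) {
    return { data: new Float32Array(n), length: n, indexData: new Uint16Array(3), indexLength: 3 };
}
scene.handle({
    data: { cmd: "build-meshes", cliff: part(10), road: part(5), brush: part(15), rocks: part(20) }
});
console.log(scene.rocks.mesh.calls.join(","));
```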

And that’s that. Nice work, worker! Take a few milliseconds of vacation time.