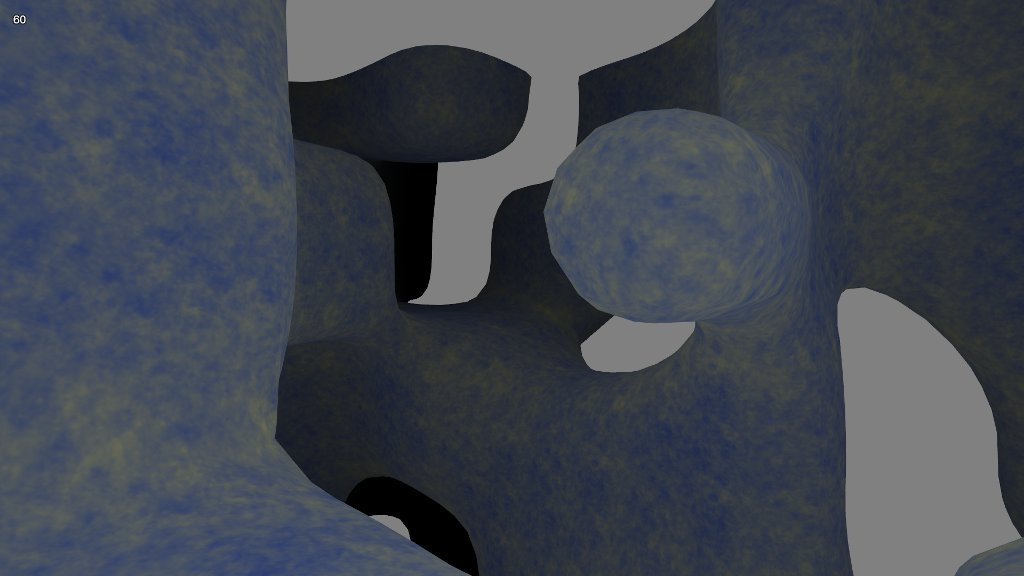

My last post got me onto the subject of 3D textures. In the process of researching the topic, I decided I needed better models to texture. Spheres are cool and all, but I’ll take a 3D noise function any day. It was time to dive into the marching cubes algorithm.

The principle is pretty simple. Take a scalar field—that is, a function that assigns a number to every point in space—and set a threshold value. You now have an isosurface. Every point assigned a number less than that threshold is inside the surface, and every point assigned a greater number is outside.

It’s like a contour plot, except that because it’s in three dimensions, the contour is a surface rather than a line. The marching cubes algorithm generates the polygons that make up that surface. Neat!
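To make those definitions concrete, here's a small JavaScript sketch. All names are hypothetical. It defines a field whose isosurface is the unit sphere, an inside/outside test that follows the convention above, and the linear interpolation that polygonizers such as marching cubes commonly use to place a vertex where the surface crosses between two samples.

```javascript
// Hypothetical example field: distance from the origin. With a threshold of
// 1.0, the isosurface is the unit sphere, and smaller values are inside it.
function sphereField(x, y, z) {
  return Math.sqrt(x * x + y * y + z * z);
}

const THRESHOLD = 1.0;

// Inside/outside test: values below the threshold are inside the surface.
function isInside(field, x, y, z) {
  return field(x, y, z) < THRESHOLD;
}

// Estimate where the isosurface crosses the segment p0 -> p1 by linearly
// interpolating the two field values. Only meaningful when one endpoint is
// inside and the other outside, which also guarantees v1 !== v0.
function edgeCrossing(field, p0, p1) {
  const v0 = field(p0[0], p0[1], p0[2]);
  const v1 = field(p1[0], p1[1], p1[2]);
  const t = (THRESHOLD - v0) / (v1 - v0);
  return [
    p0[0] + t * (p1[0] - p0[0]),
    p0[1] + t * (p1[1] - p0[1]),
    p0[2] + t * (p1[2] - p0[2]),
  ];
}

console.log(isInside(sphereField, 0, 0, 0)); // true
console.log(isInside(sphereField, 2, 0, 0)); // false
console.log(edgeCrossing(sphereField, [0.5, 0, 0], [1.5, 0, 0]).join(", ")); // 1, 0, 0
```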

I won’t go into the details of the algorithm itself. It’s fairly well documented at Paul Bourke’s site, where I found the C code to port to JavaScript. Instead, I’ll just document a few things I worked out while testing it.

Sadly, I still don’t have a true solution to the 3D texture problem. I tried generating Perlin noise in the shader, using a function like

```glsl
sin(bigNumber1 * x) * sin(bigNumber2 * y) * sin(bigNumber3 * z)
```

as a noise source and interpolating across integer boundaries. The results weren’t bad, visually speaking, but my implementation was simply too slow for comfort. Each texture fragment requires eight noise samples and seven interpolations, and with several layers making up each texture surface my midrange video card was dropping frames like mad. Either a hardware noise source or a native GLSL trilinear interpolation instruction would have saved the day. (For that matter, native WebGL support for 3D textures would have helped a bit.)
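The following is a minimal CPU-side sketch in JavaScript of that kind of noise. It evaluates a sin()-product noise source at integer lattice points and then interpolates trilinearly, which shows where the cost comes from. The constants and names are placeholders, not the demo's code, and a real version would run per fragment in GLSL.

```javascript
// Noise source in the spirit of the sin() product above. The constants are
// placeholders, not the values used in the demo. Output is mapped to [0, 1].
function lattice(ix, iy, iz) {
  const s = Math.sin(ix * 1234.5678) * Math.sin(iy * 4321.8765) * Math.sin(iz * 2468.1357);
  return s * 0.5 + 0.5;
}

function lerp(a, b, t) {
  return a + (b - a) * t;
}

// Value noise: sample the eight lattice corners of the unit cell containing
// (x, y, z), then blend them with trilinear interpolation.
function valueNoise(x, y, z) {
  const ix = Math.floor(x), iy = Math.floor(y), iz = Math.floor(z);
  const fx = x - ix, fy = y - iy, fz = z - iz;

  // Eight noise samples, one per cell corner.
  const c000 = lattice(ix, iy, iz);
  const c100 = lattice(ix + 1, iy, iz);
  const c010 = lattice(ix, iy + 1, iz);
  const c110 = lattice(ix + 1, iy + 1, iz);
  const c001 = lattice(ix, iy, iz + 1);
  const c101 = lattice(ix + 1, iy, iz + 1);
  const c011 = lattice(ix, iy + 1, iz + 1);
  const c111 = lattice(ix + 1, iy + 1, iz + 1);

  // Seven interpolations: four along x, two along y, one along z.
  const x00 = lerp(c000, c100, fx);
  const x10 = lerp(c010, c110, fx);
  const x01 = lerp(c001, c101, fx);
  const x11 = lerp(c011, c111, fx);
  const y0 = lerp(x00, x10, fy);
  const y1 = lerp(x01, x11, fy);
  return lerp(y0, y1, fz);
}

console.log(valueNoise(0.25, 1.5, 2.75));
```

Every call makes eight `lattice` samples and seven `lerp` calls. Each additional layer of noise in a texture repeats the whole process, so the per-fragment cost adds up quickly.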

I also tried Perlin’s more recent simplex noise algorithm, but had the same issues with performance. There appear to be other possible implementations out there, so I may revisit this one.

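Ultimately, I applied the solution used in the WebGL Caves demo (which also has a nice marching cubes implementation). We can simulate a 3D texture with a weighted sum of three 2D textures, each tied to a specific plane (xy, yz, and xz). The absolute values of the surface normal's components serve as the weights. |n.z| weights the xy texture, |n.x| the yz texture, and |n.y| the xz texture, so each plane dominates wherever the surface faces along the axis perpendicular to it.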

```glsl
//
// vertex shader
// note assignment of normals to texture coordinates
//
attribute vec3 position;
attribute vec3 normal;

uniform mat4 projector;
uniform mat4 modelview;

varying vec3 obj;
varying vec3 eye;
varying vec3 tex;

void main(void) {
    vec4 pos = modelview * vec4(position, 1.0);
    gl_Position = projector * pos;
    eye = pos.xyz;
    obj = position;
    tex = abs(normal);
}
```

```glsl
//
// fragment shader
// normal components used to blend planar contributions
//
precision mediump float;

uniform sampler2D noise;

varying vec3 obj;
varying vec3 eye;
varying vec3 tex;

void main(void) {
    // planar-blended noise at the base frequency...
    float c0 = tex.z * texture2D(noise, obj.xy).r
             + tex.x * texture2D(noise, obj.yz).r
             + tex.y * texture2D(noise, obj.xz).r;
    // ...and again at double the frequency
    float c1 = tex.z * texture2D(noise, 2.0 * obj.xy).r
             + tex.x * texture2D(noise, 2.0 * obj.yz).r
             + tex.y * texture2D(noise, 2.0 * obj.xz).r;
    // map the combined noise onto a blue-to-yellow color ramp
    vec3 col = mix(vec3(0.12, 0.22, 0.57), vec3(0.91, 0.83, 0.27), (c0 + c1) * 0.25);
    // darken with distance from the eye (black at 10 units and beyond)
    float l = (10.0 - length(eye)) / 10.0;
    col = col * l;
    gl_FragColor = vec4(col, 1.0);
}
```

This produces what looks like a continuous texture across the surface, and no one’s the wiser.
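The next JavaScript sketch reproduces the same blend on the CPU, to make the weighting concrete. `sample2D` is a hypothetical stand-in for a `texture2D` lookup. The planes are paired with normal components as in the fragment shader. The optional weight normalization is a common variation and is not something the shader above does.

```javascript
// Hypothetical stand-in for a 2D noise texture lookup (texture2D in the shader):
// any function of (u, v) returning values in [0, 1] works for illustration.
function sample2D(u, v) {
  return 0.5 + 0.5 * Math.sin(u * 3.1) * Math.cos(v * 2.7);
}

// Blend three planar lookups, weighting each plane by the normal component
// perpendicular to it: |n.z| -> xy, |n.x| -> yz, |n.y| -> xz.
// With normalizeWeights, the weights are divided by their sum so they add to 1.
function triplanar(pos, normal, normalizeWeights) {
  let wx = Math.abs(normal[0]);
  let wy = Math.abs(normal[1]);
  let wz = Math.abs(normal[2]);
  if (normalizeWeights) {
    const sum = wx + wy + wz;
    wx /= sum;
    wy /= sum;
    wz /= sum;
  }
  const [x, y, z] = pos;
  return wz * sample2D(x, y) + wx * sample2D(y, z) + wy * sample2D(x, z);
}

const diagonal = [1 / Math.sqrt(3), 1 / Math.sqrt(3), 1 / Math.sqrt(3)];
console.log(triplanar([0.3, 0.7, 1.1], diagonal, false));
console.log(triplanar([0.3, 0.7, 1.1], diagonal, true));
```

For a unit normal, |n.x| + |n.y| + |n.z| ranges from 1 on axis-aligned faces to √3 on diagonal ones. Without normalization, diagonal regions therefore receive up to about 1.7 times the total weight. Assuming noise values in [0, 1], the shader's `(c0 + c1) * 0.25` scaling keeps the mix factor below 1 either way, but it doesn't even out that variation.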

Generating the normal vectors across an arbitrary surface can be a chore. Since I had a scalar field function representing the surface, I was able to do it this way.

```javascript
var n = SOAR.vector.create();

// generates surface from 3D noise function
var noise = SOAR.noise3D.create(1968103401, 1, 32, 0.5);

var field = this.field = function(x, y, z) {
    return noise.get(x, y, z);
};

// generates surface normals for texture blending
// (really a gradient function, but as it points
// toward wherever the field is strongest, it will
// always point *away* from the local isosurface)
// note: `step`, the small sampling offset, is defined outside this excerpt
var gradient = this.gradient = function(n, x, y, z) {
    n.x = field(x + step, y, z) - field(x - step, y, z);
    n.y = field(x, y + step, z) - field(x, y - step, z);
    n.z = field(x, y, z + step) - field(x, y, z - step);
    n.norm();
};
```

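I sample the field a small step to either side of the point along each axis, take the differences, normalize the result, and boo-ya! Instant surface normal. (Or gradient. Here the two are the same thing, because the gradient of a scalar field is perpendicular to its isosurfaces, so the normalized gradient is the surface normal.)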
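The following standalone JavaScript sketch shows the same central-difference idea. It uses plain arrays in place of SOAR vectors, passes the sampling offset in explicitly, and adds a hypothetical sphere field whose true normals are known, which makes the result easy to check.

```javascript
// Central-difference estimate of the field's gradient, normalized to unit length.
function gradientNormal(field, x, y, z, step) {
  const gx = field(x + step, y, z) - field(x - step, y, z);
  const gy = field(x, y + step, z) - field(x, y - step, z);
  const gz = field(x, y, z + step) - field(x, y, z - step);
  const len = Math.hypot(gx, gy, gz);
  // A zero gradient (locally flat field) has no defined direction.
  return len > 0 ? [gx / len, gy / len, gz / len] : [0, 0, 0];
}

// Hypothetical test field: distance from the origin. Its isosurfaces are
// spheres, so the normal at any point should point straight away from the origin.
const sphereDistance = (x, y, z) => Math.sqrt(x * x + y * y + z * z);

console.log(gradientNormal(sphereDistance, 1, 1, 0, 0.01));
```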

The initial meshes I generated were uncomfortably large. I needed a way to index the mesh, and I couldn’t make any assumptions about the ordering of the vertices. So I applied the simplest solution I could think of: store each vertex, assign it an index, then reuse that index whenever the vertex is referenced again.

```javascript
var hash = {};
var indx = 0;

// callback for building surface mesh
function polycb(p) {
    gradient(n, p.x, p.y, p.z);
    var key = Math.floor(p.x * 100) + "." + Math.floor(p.y * 100) + "." + Math.floor(p.z * 100);
    var ent = hash[key];
    if (ent !== undefined) {
        mesh.index(ent);
    } else {
        mesh.set(p.x, p.y, p.z, n.x, n.y, n.z);
        mesh.index(indx);
        hash[key] = indx++;
    }
}
```

This was my first attempt at using a JavaScript associative array to store a large number of keys. I liked its simplicity and performance—it added about 15% to the run time, while cutting the vertex count by a factor of six.

Note that the keys are generated by concatenating scaled, truncated integer coordinates. I’d initially used the raw floating-point coordinates, but because of the way JavaScript’s binary floating point represents decimal values, the resulting keys were nearly 30 characters long and took forever to look up. Scaling and truncating the coordinates brought keys down to 6 to 9 characters and made lookups much faster.
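The next JavaScript sketch is a self-contained version of the same deduplication idea. It uses a `Map` instead of a plain object, which is a substitution; either works as a string-keyed hash in modern JavaScript. `createIndexedMesh` and `addVertex` are hypothetical names, not SOAR's API, and normals are left out for brevity.

```javascript
// Minimal indexed-mesh builder: each unique (quantized) position is stored once,
// and every reference to it emits that position's index.
function createIndexedMesh(scale) {
  const lookup = new Map(); // key string -> vertex index
  const positions = [];     // flat [x0, y0, z0, x1, y1, z1, ...]
  const indices = [];

  // Scale and truncate, as in the post, so nearly-equal floats share a key.
  function keyOf(x, y, z) {
    return Math.floor(x * scale) + "." + Math.floor(y * scale) + "." + Math.floor(z * scale);
  }

  function addVertex(x, y, z) {
    const key = keyOf(x, y, z);
    let index = lookup.get(key);
    if (index === undefined) {
      index = positions.length / 3;
      positions.push(x, y, z);
      lookup.set(key, index);
    }
    indices.push(index);
    return index;
  }

  return { addVertex, positions, indices };
}

// Two triangles sharing an edge: six references, but only four unique vertices.
const mesh = createIndexedMesh(100);
[[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]].forEach(
  ([x, y, z]) => mesh.addVertex(x, y, z)
);
console.log(mesh.indices.join(",")); // 0,1,2,1,3,2
console.log(mesh.positions.length / 3); // 4
```

Truncation has one caveat. Two positions that differ only by rounding noise can still land on opposite sides of a grid line and get separate keys. At a scale of 100, for example, 0.4999999 and 0.5000001 map to 49 and 50. That costs a duplicate vertex but does not break the mesh.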

Once I could generate these complex surfaces, I wanted to fly about inside them. I needed a way to detect collisions between the camera and the surface.

```javascript
// set up the camera direction vector
direct.set(0, 0, 0);
if (motion.movefore) {
    direct.add(camera.front);
}
if (motion.moveback) {
    direct.sub(camera.front);
}
if (motion.moveleft) {
    direct.sub(camera.right);
}
if (motion.moveright) {
    direct.add(camera.right);
}
speed = (motion.movefast ? 2 : 1) * SOAR.sinterval;
direct.norm();

// find the camera's next position given the current travel direction
tempps.copy(direct).mul(speed).add(pos);

// check the field value at that position; is it less than the cutoff?
if (BED.mctest.field(tempps.x, tempps.y, tempps.z) < 0.51) {
    // get the normal vector near the surface
    tempps.add(pos).mul(0.5);
    BED.mctest.gradient(normal, tempps.x, tempps.y, tempps.z);
    // adding normal to player direction should push us away
    direct.add(normal);
    // however, the vector sum tends to jitter when we're
    // pointed toward the surface so check the dot product
    if (direct.dot(normal) < 1) {
        // rotating sum around the normal smoothes it a bit
        direct.cross(normal).neg().cross(normal);
    }
}
direct.mul(speed);
pos.add(direct);
```

Again, a scalar field function comes in handy. As the surface exists wherever the field’s value is 0.5, I use 0.51 as the collision cutoff. (Setting the cutoff to 0.5 would allow the camera to penetrate the surface.) When I have a field function, I like to handle collisions by taking the vector sum of the camera’s direction and the surface normal near the collision point, which here is sampled halfway between the current and next positions. I can add the resulting vector to the camera’s position, which prevents it from moving any closer to the surface.
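The following JavaScript sketch is a stripped-down version of that collision response, without the dot-product check. It replaces SOAR vectors with plain arrays and the noise field with a hypothetical solid ball whose outward normal is known exactly. `moveCamera`, `field`, and `normalAt` are illustrative names, not the demo's code.

```javascript
// Hypothetical field: a solid ball of radius 1 at the origin. The value is half
// the distance from the origin, so the 0.5 isosurface sits at radius 1, values
// below 0.5 are inside the solid, and the camera flies where values are higher.
const field = (x, y, z) => Math.sqrt(x * x + y * y + z * z) / 2;

// For this field the outward normal is known analytically; in the demo it
// comes from the central-difference gradient instead.
function normalAt(p) {
  const len = Math.sqrt(p[0] * p[0] + p[1] * p[1] + p[2] * p[2]);
  return [p[0] / len, p[1] / len, p[2] / len];
}

const CUTOFF = 0.51; // slightly above the 0.5 isosurface, as in the post

const add = (a, b) => [a[0] + b[0], a[1] + b[1], a[2] + b[2]];
const scale = (a, s) => [a[0] * s, a[1] * s, a[2] * s];

// One movement step along a unit travel direction: if the look-ahead position
// falls below the cutoff, add the normal sampled halfway between the current
// and next positions before moving.
function moveCamera(pos, dir, speed) {
  const next = add(pos, scale(dir, speed));
  let move = dir;
  if (field(next[0], next[1], next[2]) < CUTOFF) {
    move = add(move, normalAt(scale(add(pos, next), 0.5)));
  }
  return add(pos, scale(move, speed));
}

// Heading straight at the ball: the normal cancels the motion entirely.
console.log(moveCamera([1.1, 0, 0], [-1, 0, 0], 0.1).join(", ")); // 1.1, 0, 0
```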

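In this case, motion across the surface caused the camera to jitter as the normal changed, and I had to find a way to stabilize it. Crossing the vector sum with the normal twice smooths it out. Assuming SOAR’s `cross` computes `this × v` in place, that operation is a projection rather than a rotation. By the vector triple product identity, (n × v) × n = v − n(v · n) for a unit normal n, so the step removes the sum’s component along the normal. Since v = d + n, what remains is d − n(d · n), the part of the travel direction tangent to the surface, so the camera slides along the surface instead of being shoved away from it.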
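The double cross product in the collision code can be checked numerically. In the JavaScript sketch below (plain arrays, arbitrary test vectors), `doubleCross` mimics the `cross(normal).neg().cross(normal)` chain. It assumes that SOAR's `cross` computes `this × argument` in place and that `neg` flips the sign. Its result matches a projection of the vector sum onto the plane perpendicular to the normal, which is also the tangential part of the original travel direction.

```javascript
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a, b) => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const neg = (a) => [-a[0], -a[1], -a[2]];

// The demo's chain, under the assumption above: (-(v x n)) x n
function doubleCross(v, n) {
  return cross(neg(cross(v, n)), n);
}

// Projection of v onto the plane perpendicular to the unit normal n: v - n (v . n)
function projectToTangentPlane(v, n) {
  const k = dot(v, n);
  return [v[0] - k * n[0], v[1] - k * n[1], v[2] - k * n[2]];
}

// Unit travel direction d heading into a surface with unit normal n (d . n < 0).
const n = [1 / 3, 2 / 3, 2 / 3];
const d = [-0.6, 0.0, -0.8];
const v = [d[0] + n[0], d[1] + n[1], d[2] + n[2]]; // the vector sum from the collision code

console.log(doubleCross(v, n));
console.log(projectToTangentPlane(v, n));
console.log(projectToTangentPlane(d, n)); // same vector: adding n doesn't change the tangential part
```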

To see it all in action, go to the Live Demo. WSAD steers. Hit Q for fullscreen.