Humans have a psychological need to decorate their spaces, and our blogs are no exception. Even those of us who prefer a minimalist approach and loudly demand that the content be the focus aren’t immune to this; I can’t tell you how many tech blogs I’ve read that are just black text on a white page but use code to make the sidebar vibrate. Way to let me focus, dude. wiggle wiggle wiggle wiggle arrgh

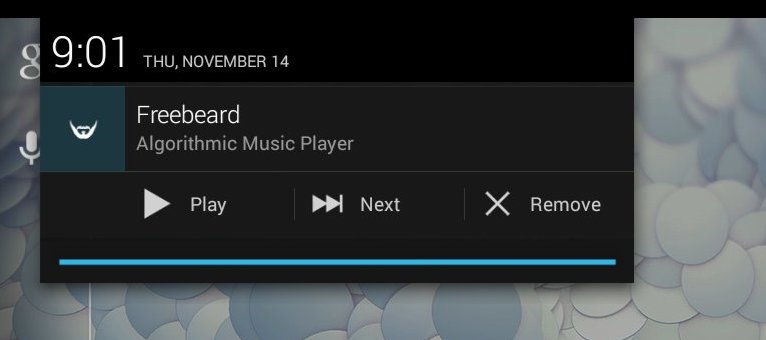

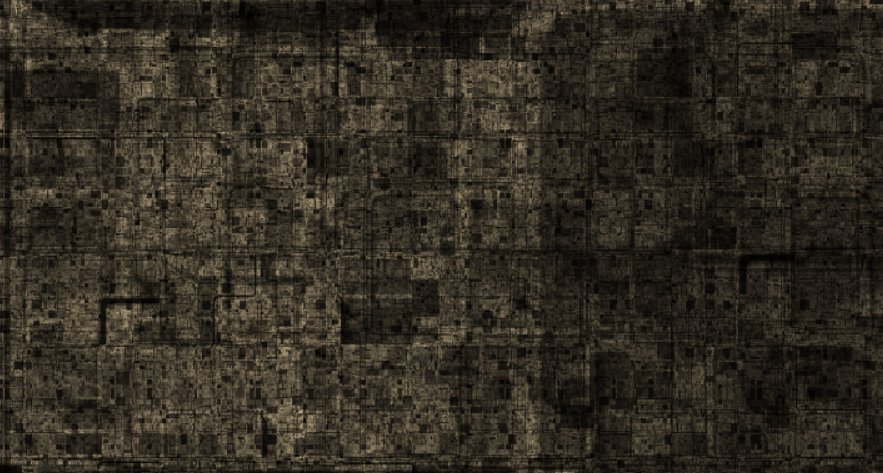

I like a little decoration, and a little dynamism too, so one recent change to the blog is the header image above. It’s a dynamically generated canvas that changes on every page refresh. Grab the code. Here’s a breakdown of how it works.

The whole thing runs inside an immediately invoked function expression (a self-executing closure) to keep its variables private. We generate a random color and its complement, plus a couple of large pseudorandom coefficients that drive the pattern generator.

```javascript
(function() {
    // select a random color and its complement (channels in 0..255)
    var color0 = { r: 255 * Math.random(), g: 255 * Math.random(), b: 255 * Math.random() };
    var color1 = { r: 255 - color0.r, g: 255 - color0.g, b: 255 - color0.b };

    // select pseudorandom coefficients for the noise function
    var a0 = 2234958 * Math.random() + 38402;
    var a1 = 7459483 * Math.random() + 80984;
```
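A standalone sketch of the color step, pulled out of the closure so it can run on its own. `randomColor` and `complement` are hypothetical helper names, channels are assumed to lie in the 0 to 255 range that `ImageData` stores, and the random source is passed in as a parameter so a fixed one can be substituted for testing.

```javascript
// Hypothetical helpers (not part of the banner script): pick a random
// 8-bit color and compute its complement channel by channel.
function randomColor(rand) {
    // rand() is assumed to return a float in [0, 1), like Math.random.
    return { r: 255 * rand(), g: 255 * rand(), b: 255 * rand() };
}

function complement(c) {
    // Reflect each channel about the middle of the 0..255 range.
    return { r: 255 - c.r, g: 255 - c.g, b: 255 - c.b };
}

const c0 = randomColor(Math.random);
const c1 = complement(c0);
console.log(c0, c1);
```

Each channel of `c0` and its partner in `c1` sum to 255, so a dark pick is always paired with a light one, though a color near mid-gray will be paired with another color near mid-gray.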

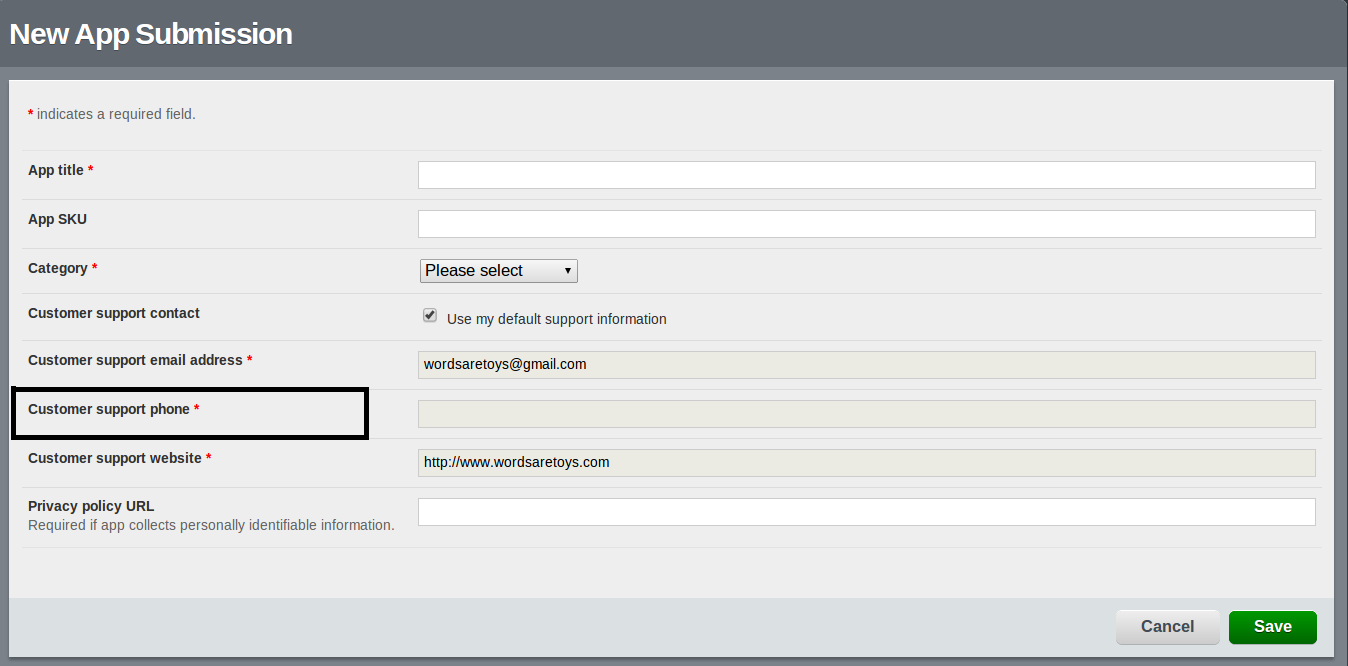

The banner is tailored for WordPress: many themes, including the one this blog uses, give the header element the id `masthead`. We want the canvas to have the same height as the masthead, and that height shouldn’t change once the page has loaded. The width is another matter: we can’t guarantee it will stay put (the window may be resized, or a mobile device rotated), so the image buffer uses a fixed maximum width of 2048 pixels that should cover most screens.

```javascript
    // locate the WordPress header element
    var header = document.getElementById("masthead");
    var width = 2048;                 // maximum buffer width, in pixels
    var height = header.clientHeight; // match the header's height
```

We want to shim the canvas into place “under” the header without disrupting any other elements. I insert it into the DOM just before the element with id `page`, which contains the header. Absolute positioning takes the canvas out of the normal flow, so it can sit there without pushing anything else around.

```javascript
    // create canvas and draw buffer
    var canvas = document.createElement("canvas");
    canvas.style.position = "absolute";
    canvas.width = document.body.clientWidth;
    canvas.height = height;
    document.body.insertBefore(canvas, document.getElementById("page"));
```

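We draw the pattern directly into an `ImageData` buffer rather than through the canvas drawing API. The buffer is created at the full maximum width, so it stays wide enough if the canvas grows later. The `set()` function writes a single RGB pixel at an (x, y) position in the buffer, at full opacity.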

```javascript
    var context = canvas.getContext("2d");
    var image = context.createImageData(width, height);

    // set a pixel in the draw buffer (4 bytes per pixel: R, G, B, A; row-major)
    function set(x, y, r, g, b) {
        var i = (Math.floor(x) + Math.floor(y) * image.width) * 4;
        image.data[i++] = r;
        image.data[i++] = g;
        image.data[i++] = b;
        image.data[i] = 255; // alpha: fully opaque
    }
```
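A standalone sketch of the buffer layout that `set()` relies on, runnable without a browser. `ImageData.data` is a `Uint8ClampedArray` holding four bytes (R, G, B, A) per pixel in row-major order; here a plain `Uint8ClampedArray` stands in for it, and `makeBuffer` and `setPixel` are hypothetical names.

```javascript
// Stand-in for an ImageData object: same flat RGBA layout, no canvas needed.
function makeBuffer(width, height) {
    return { width: width, height: height, data: new Uint8ClampedArray(width * height * 4) };
}

function setPixel(buf, x, y, r, g, b) {
    // Row y starts y * width pixels in; each pixel occupies 4 bytes.
    const i = (Math.floor(x) + Math.floor(y) * buf.width) * 4;
    buf.data[i] = r;
    buf.data[i + 1] = g;
    buf.data[i + 2] = b;
    buf.data[i + 3] = 255; // fully opaque
}

const buf = makeBuffer(4, 3);
// Out-of-range and fractional values are clamped and rounded on write.
setPixel(buf, 2, 1, 300, -20, 127.6);
console.log(Array.from(buf.data.slice(24, 28))); // bytes 255, 0, 128, 255
```

The clamping matters for this banner: `interp()` adds noise that can push a channel below 0 or above 255, and the typed array silently clamps (and rounds) those values, so `set()` never has to sanitize its inputs.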

Now things start to get interesting. The header image is a sort of contour plot drawn in two colors, with black lines separating distinct areas. We interpolate each RGB channel between the two colors generated above, weighted by k, then multiply by m so that pixels fade to black as m approaches zero; that’s where the borders appear. Left alone, the output is rather flat and unaesthetic, so I add a little noise to each channel to give it a grainy effect.

```javascript
    // set an interpolated pixel in the draw buffer
    function interp(x, y, k, m) {
        // blend the two colors by k (0 = color0, 1 = color1)
        var r = (1 - k) * color0.r + k * color1.r;
        var g = (1 - k) * color0.g + k * color1.g;
        var b = (1 - k) * color0.b + k * color1.b;

        // borders: darken toward black as m approaches 0
        r = r * m;
        g = g * m;
        b = b * m;

        // noisify: add uniform jitter in [-10, 10)
        r = r + (Math.random() * 20 - 10);
        g = g + (Math.random() * 20 - 10);
        b = b + (Math.random() * 20 - 10);

        set(x, y, r, g, b);
    }
```
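A pure, browser-free sketch of what `interp()` computes for one pixel. Unlike the banner’s version, the hypothetical `shade()` returns the RGB triple instead of writing it, and takes the noise source as a parameter (assumed to behave like `Math.random`) so the blend can be checked with the noise switched off.

```javascript
// Linear interpolation between a and b; k = 0 gives a, k = 1 gives b.
function lerp(a, b, k) {
    return (1 - k) * a + k * b;
}

// Blend two colors by k, darken by m, then add uniform jitter.
function shade(c0, c1, k, m, noise) {
    const jitter = () => noise() * 20 - 10; // uniform in [-10, 10)
    return [
        lerp(c0.r, c1.r, k) * m + jitter(),
        lerp(c0.g, c1.g, k) * m + jitter(),
        lerp(c0.b, c1.b, k) * m + jitter(),
    ];
}

const red = { r: 255, g: 0, b: 0 };
const cyan = { r: 0, g: 255, b: 255 };
const noNoise = () => 0.5; // 0.5 * 20 - 10 === 0, so no jitter

console.log(shade(red, cyan, 0.25, 1, noNoise)); // 191.25, 63.75, 63.75
console.log(shade(red, cyan, 0.25, 0, noNoise)); // 0, 0, 0 (a border pixel)
```

Because the noise is added after the darkening step, border pixels in the banner aren’t pure black: they receive jitter of up to 10 levels per channel, with negative values clamped to 0 when they land in the image buffer.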

JavaScript has no built-in seedable 2D noise generator (even `Math.random()` can’t be seeded), so I’ve rolled my own using nested trig functions with very high frequencies. Small changes in x and y produce large changes in the output, and because the outermost function is a sine, the output always lies in [-1, 1]. The seeds (a0, a1) are the coefficients generated in the first step.

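To produce the actual contours, we interpolate across the noise source in two dimensions: `randm()` is sampled at the four integer lattice points surrounding (x, y), and `source()` blends them bilinearly. Strictly speaking this is value noise, a close relative of Perlin noise (which is built from random gradients rather than random values); the same idea turns up in many examples and projects on this blog.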

```javascript
    // map a point to a pseudorandom number in [-1, 1]
    function randm(x, y) {
        return Math.sin(a0 * Math.sin(x * a1 + a0) + a1 * Math.cos(y * a0 + a1));
    }

    // bilinearly interpolate a noise value for a point
    function source(x, y) {
        var ix = Math.floor(x);
        var iy = Math.floor(y);
        var mx = x - ix; // fractional offset within the cell, in [0, 1)
        var my = y - iy;
        var dx0 = (1 - mx) * randm(ix, iy) + mx * randm(ix + 1, iy);
        var dx1 = (1 - mx) * randm(ix, iy + 1) + mx * randm(ix + 1, iy + 1);
        return (1 - my) * dx0 + my * dx1;
    }
```
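A standalone sketch of the same noise source, wrapped in a hypothetical `makeNoise()` factory so the coefficients are explicit parameters instead of closure variables. With fixed coefficients the output is fully reproducible, which is one way to get the seedable behavior `Math.random()` can’t provide.

```javascript
// Value noise: a trig-based hash gives each integer lattice point a
// pseudorandom value, and bilinear interpolation fills in between.
function makeNoise(a0, a1) {
    // Hash an (x, y) pair to a value in [-1, 1].
    function randm(x, y) {
        return Math.sin(a0 * Math.sin(x * a1 + a0) + a1 * Math.cos(y * a0 + a1));
    }

    // Blend the four lattice values surrounding (x, y).
    function source(x, y) {
        const ix = Math.floor(x);
        const iy = Math.floor(y);
        const fx = x - ix; // fractional offset inside the cell, in [0, 1)
        const fy = y - iy;
        const top = (1 - fx) * randm(ix, iy) + fx * randm(ix + 1, iy);
        const bottom = (1 - fx) * randm(ix, iy + 1) + fx * randm(ix + 1, iy + 1);
        return (1 - fy) * top + fy * bottom;
    }

    return { randm: randm, source: source };
}

// Fixed (arbitrary) coefficients make the output the same on every run.
const noise = makeNoise(1234567.8, 2345678.9);
console.log(noise.source(0, 0), noise.source(0.5, 0.5), noise.source(-0.75, 0.25));
```

At lattice points the interpolated value equals the hash exactly, and it varies continuously across cell boundaries. Note that the banner samples coordinates from -1 up to (but not including) 1, so the whole header covers a 2 by 2 block of cells, or 3 by 3 lattice points.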

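To generate the contour plot, we evaluate the source function at each point of the image buffer, after normalizing the pixel coordinates to the range [-1, 1). The result is rescaled from [-1, 1] to a value k in [0, 1], and eight distinct regions are created by rounding k down to the nearest eighth. The boundaries between regions fall where the fractional part of 8k is near zero (or, from the other side, near one). Taking the sine of π times that fraction gives a value that is zero at the boundaries and one midway between them, and raising it to the power 0.1 pushes most of the range toward one, shaping the dark edge into a thin pencil line.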

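```javascript
    // for each point in the buffer
    for (var x = 0; x < width; x++) {
        for (var z = 0; z < height; z++) {
            // normalize pixel coordinates to [-1, 1)
            var xx = 2 * (x / width - 0.5);
            var zz = 2 * (z / height - 0.5);
            // rescale the noise from [-1, 1] to [0, 1]
            var k = (source(xx, zz) + 1) * 0.5;
            // quantize to one of eight flat levels
            var l = Math.floor(k * 8) / 8;
            // position within the level; the edge factor dips to 0 at boundaries
            var m = k * 8;
            m = m - Math.floor(m);
            m = Math.pow(Math.sin(Math.PI * m), 0.1);
            interp(x, z, l, m);
        }
    }
```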
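A standalone sketch of the quantization step inside the loop, pulled out as a hypothetical `contour()` helper so it can run without a canvas. The defaults (8 bands, exponent 0.1) mirror the banner’s constants; exposing them as parameters is an assumption for illustration.

```javascript
// Map a noise value s in [-1, 1] to a flat band level and an edge factor
// that is 0 on band boundaries and 1 in the middle of each band.
function contour(s, bands = 8, sharpness = 0.1) {
    const k = (s + 1) * 0.5;                         // rescale to [0, 1]
    const level = Math.floor(k * bands) / bands;     // flat band value
    const frac = k * bands - Math.floor(k * bands);  // position inside band
    // A small exponent pulls most of sin(pi * frac) toward 1, so only
    // values very close to a boundary stay dark: a thin line.
    const edge = Math.pow(Math.sin(Math.PI * frac), sharpness);
    return { level: level, edge: edge };
}

console.log(contour(0));      // on a boundary: level 0.5, edge 0
console.log(contour(-0.875)); // mid-band (k = 0.0625): level 0, edge 1
```

Raising `sharpness` toward 1 widens the dark lines into soft gradients; lowering it toward 0 thins them further.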

The completed image is drawn to the canvas. When the browser window is resized, we match the canvas width to the new body width and draw the image again; changing a canvas’s dimensions clears its contents, so the redraw is what keeps the banner visible.

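```javascript
    context.putImageData(image, 0, 0);

    // resizing a canvas clears it, so redraw after matching the new width;
    // addEventListener avoids overwriting any other resize handler
    window.addEventListener("resize", function() {
        canvas.width = document.body.clientWidth;
        context.putImageData(image, 0, 0);
    });
})();
```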

It would be possible, of course, to animate the image. The source function is defined for all real numbers, so I could scroll it infinitely in one direction or another. Eye-catching? Certainly. Missing the point? Maybe. To paraphrase a T-shirt: hey, my content is down here.