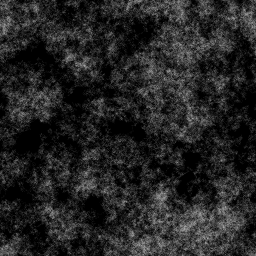

I like a bit of structured noise. Photoshop (and the GIMP) call it difference clouds, but to me it’s always been drunkwalk, because that’s the algorithm I use to make it. For past games, I wrote a C program to iterate random steps across an array and place the results in an image file, like so.

I’d load that image from the server and hand it off to WebGL as a texture, doing a little Perlinization in the fragment shader to colorize and stretch it. However, what I really wanted was client-side generation. I wanted to make these textures on the fly and use them for a load of other purposes: heightmaps, for example. There’s a smoothness to drunkwalk noise that just pulling random numbers out of the air doesn’t get you. You’ve got to add that stuff up.

So I finally added a drunkwalk noise generator to my toolkit.

    walk: function (img, seed, reps, blend, c, p0, p1, p2, p3) {
        var w = img.width;
        var h = img.height;
        // total number of steps, as a multiple of the image area
        var il = Math.round(w * h * reps);
        var rng = this.rng;
        var dt = img.data;
        var dnelb = 1 - blend;
        var x, y, i, j;

        rng.reseed(seed);
        // start at a random pixel
        x = Math.floor(rng.get(0, w));
        y = Math.floor(rng.get(0, h));
        for (i = 0; i < il; i++) {
            // blend the current pixel toward c
            j = x + w * y;
            dt[j] = Math.round(dt[j] * dnelb + c * blend);
            // each direction is tested independently;
            // stepping off an edge wraps to the opposite side
            if (rng.get() < p0) {
                x++;
                if (x >= w) {
                    x = 0;
                }
            }
            if (rng.get() < p1) {
                y++;
                if (y >= h) {
                    y = 0;
                }
            }
            if (rng.get() < p2) {
                x--;
                if (x < 0) {
                    x = w - 1;
                }
            }
            if (rng.get() < p3) {
                y--;
                if (y < 0) {
                    y = h - 1;
                }
            }
        }
    }

To generate a typical chunk of structured noise, you’d pass parameters like this.

    walk(tex, seed, 8, 0.02, 255, 0.5, 0.5, 0.5, 0.5);

We assume the tex object resembles a native ImageData object in having height, width, and data properties. However, the data format is a single byte luminance channel (as opposed to the four-byte RGBA format). You can still pass this right into WebGL as a texture, and colorize it in the shader.
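As a rough illustration of such an object, here is a minimal sketch. The names `makeLuminanceImage` and `uploadLuminance` are hypothetical and not taken from the toolkit itself, and the upload step assumes a WebGL 1 context `gl` with a texture already bound to `gl.TEXTURE_2D`.

```javascript
// Hypothetical helper: an ImageData-like object with one byte per pixel.
function makeLuminanceImage(width, height) {
    return {
        width: width,
        height: height,
        data: new Uint8Array(width * height) // zero-filled, so every pixel starts black
    };
}

// Hypothetical helper: upload the buffer as a single-channel texture.
// Assumes gl is a WebGL 1 context with a texture bound to TEXTURE_2D.
function uploadLuminance(gl, tex) {
    // rows are tightly packed at one byte per pixel, so drop the default 4-byte alignment
    gl.pixelStorei(gl.UNPACK_ALIGNMENT, 1);
    gl.texImage2D(gl.TEXTURE_2D, 0, gl.LUMINANCE, tex.width, tex.height, 0,
        gl.LUMINANCE, gl.UNSIGNED_BYTE, tex.data);
}
```

Setting the unpack alignment to 1 matters for widths that are not a multiple of four; for a 64×64 texture the default would also work.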

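You can pass a custom seed, which will generate the same pattern each time, or a zero to select a random pattern. (The rng object is a static RNG. Called with no arguments, it hands out numbers from zero to one; called with bounds, as in rng.get(0, w), it returns a number in that range.)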
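For readers who want to run the walk without the rest of the toolkit, here is a minimal stand-in for the `rng` object. It is a sketch under stated assumptions, not the toolkit's own generator: the multiplier and increment are the well-known Numerical Recipes constants for a 32-bit linear congruential generator, and treating a zero seed as "pick something random" via `Math.random` is a guess at the behaviour described above.

```javascript
// Hypothetical stand-in for the rng object used by walk().
// Constants are the Numerical Recipes LCG values, chosen for illustration only.
var rng = {
    state: 1,

    // A zero (or missing) seed selects a random starting state (assumption).
    reseed: function (seed) {
        this.state = (seed || Math.floor(Math.random() * 4294967296)) >>> 0;
    },

    // get() returns a number in [0, 1); get(lo, hi) returns one in [lo, hi).
    get: function (lo, hi) {
        // state = (a * state + c) mod 2^32; Math.imul keeps the product exact
        this.state = (Math.imul(1664525, this.state) + 1013904223) >>> 0;
        var u = this.state / 4294967296;
        if (lo === undefined) {
            return u;
        }
        return lo + u * (hi - lo);
    }
};

rng.reseed(42);
console.log(rng.get(), rng.get(0, 64));
```

Reseeding with the same non-zero value replays the same sequence, which is what makes a given seed reproduce the same pattern.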

The reps parameter is the most important. Good patterns require a large number of steps, and the larger the size of the array, the more steps you need. Thus, I specify the number of steps as a multiple of the area.

The blend parameter works like an alpha-blending value, determining how much each step will blend between the intensity present in the channel and the intensity given by the c parameter. In this case, I’m blending between 0 and 255.
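To get a feel for the blend value, it can help to watch a single pixel as the walker lands on it again and again. The sketch below reuses the blending expression from the walk loop; the helper names `blendStep` and `visitPixel` are made up for this illustration.

```javascript
// One blending step, using the same expression as the walk loop.
function blendStep(value, c, blend) {
    return Math.round(value * (1 - blend) + c * blend);
}

// Repeatedly "visit" a single pixel and record its intensity after each visit.
function visitPixel(visits, c, blend) {
    var px = new Uint8Array(1); // starts at 0, like a fresh luminance buffer
    var trace = [];
    for (var i = 0; i < visits; i++) {
        px[0] = blendStep(px[0], c, blend);
        trace.push(px[0]);
    }
    return trace;
}

var trace = visitPixel(200, 255, 0.02);
console.log(trace[0], trace[49], trace[199]);
```

Each visit closes a fixed fraction of the remaining gap to `c`, so frequently visited pixels brighten quickly at first and then level off. Because the result is rounded to a whole number on every step, the value can stall somewhat short of `c` once the per-step increment rounds down to zero.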

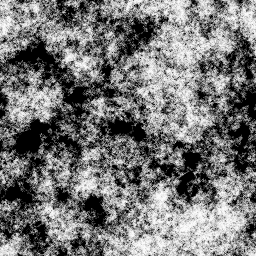

The p0-p3 values let you alter the probability that your “drunk” will stumble in one direction or another on each step. Changing the probabilities alters the structure of the resulting image.
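One way to reason about a set of probabilities is to look at the average displacement they produce per step. In the walk loop, p0 and p2 move the walker in +x and -x, p1 and p3 in +y and -y, and each is tested independently. The helper below (`expectedDrift` is a hypothetical name) just does that arithmetic and ignores wraparound.

```javascript
// Average displacement per step, in pixels, ignoring wraparound.
// p0 moves +x, p1 moves +y, p2 moves -x, p3 moves -y.
function expectedDrift(p0, p1, p2, p3) {
    return { dx: p0 - p2, dy: p1 - p3 };
}

console.log(expectedDrift(0.5, 0.5, 0.5, 0.5));   // { dx: 0, dy: 0 }
console.log(expectedDrift(0.75, 0.5, 0.25, 0.5)); // { dx: 0.5, dy: 0 }
```

With all four values equal there is no net drift, though a single step can still move diagonally or not at all. Unequal opposing pairs push the walker steadily in one direction.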

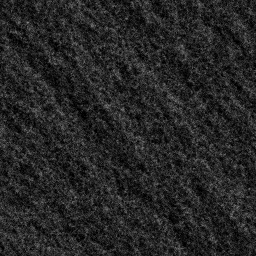

Performance isn’t bad, thanks to JIT compilation. I can generate about ten 64×64 images (with a reps value of 8) without noticing any slowdown on a 2GHz AMD64.

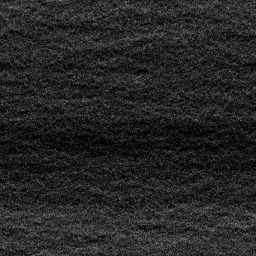

You may note that the algorithm enforces boundary conditions in an odd way. If a step takes us off the “side” of the array (i.e., x >= width), we resume on the opposite side (x = 0). This creates a natural tiling effect that makes texturing a breeze.
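The wraparound can also be written as a single expression, which is handy if steps are ever longer than one pixel or land on fractional coordinates. This is a small sketch; `wrap` is a hypothetical helper, not something from the toolkit.

```javascript
// Wrap v into the range [0, n), for positive n.
// The extra "+ n" handles negative values, since % in JavaScript keeps the sign of v.
function wrap(v, n) {
    return ((v % n) + n) % n;
}

console.log(wrap(64, 64), wrap(-1, 64), wrap(-0.5, 64)); // 0 63 63.5
```

For single-pixel integer steps this gives the same result as the explicit edge checks in the walk loop.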

Nice post. However, could I recommend that you re-post the images using PNG, rather than JPEG? JPEG’s compression algorithm will really degrade these images, since they have so many high-frequency components. I can see the edges of the compression blocks, quite clearly, in these images. Since PNG is lossless, it won’t have the same problem.

Also, have you tried using an angle for direction, rather than up/down/left/right? Maybe use one random sample for the angle, and another for the distance. With two coefficients, you could experiment with different looks. Often I find that when I write a graphics algorithm like this, using up/down/left/right, that the rectangular-ness of these directions affects the outcome, sometimes in good ways and sometimes bad.

Good point on the JPEG issue. I will repost as PNG soon.

Angle-based walking, yeah, I could see that. I might bang out something this week and see how it looks. I’ll post any results. Thanks!

Actually, I think I was partly wrong. JPEG is definitely a problem, but it’s not what is causing the blocks that I see. I tried running your code locally, and I see some sort of periodic effect (a grid), with a horizontal and vertical period of about 22 pixels. (JPEG blocks are 8×8.) Looking at your PNG images, I still see the same thing. What’s up with that?

The effect is most prominent in drunkwp6.png and drunkwp9.png. Do you see it, too?

I might convert this code to something other than JS, and see if the same effect occurs. I’m assuming you’re using Canvas and createImageData() — is that right?

Hm. I’ve blown up the original images and I’m afraid I’m not seeing this periodic effect. Doesn’t mean it’s not there, of course, I’m just not tuned into it.

My offhand guess is that it could have something to do with my random number generator, which is hand-rolled (I needed a seedable RNG). It’s the standard linear congruential “good enough” algorithm but it can produce artifacts for large data sets.

Instead of using createImageData, I create a custom object that resembles an ImageData object but has a single-byte luminance channel rather than the four-byte RGBA format. The relevant code is at https://github.com/wordsaretoys/soar/blob/master/pattern.js in the make() method.

/facepalm I figured it out. Local brain damage. Somehow, this browser’s zoom level got set to 105%. (?!?) So it’s an artifact of the *upsampling* being done by the browser. I only realized this after loading these images on a different machine. I ran my own random-walk code locally, in the browser, and so I saw the exact same artifacts, even with code that I was writing, so I thought it was a real effect.

Meanwhile, I did try the “directional” random walk. It doesn’t seem markedly different. However, I did find that experimenting with the “stagger” distance had some interesting effects. Setting it to 1.0 gave something close to your results. Setting it to 0.5 (very short stagger) gave very extreme, high-contrast results. As the stagger distance increases, the whole image tends toward a uniform gray, which should be expected.

Also, I did something a little different. Rather than quantizing x and y every time you stagger, I kept the fractional parts, and I only quantized when converting to the pixel location. Also, it took me a second to realize that using ImageData meant that I had 4 values per pixel, rather than one.

The code’s a bit ugly; I was just mashing on a few different ideas.

    function drunkwalk(img, seed, reps, blend, c) {
        var w = img.width;
        var h = img.height;
        var il = Math.round(w * h * reps);
        // var rng = rng;
        var dt = img.data;
        var dnelb = 1 - blend;
        var x, y, i, j;
        var R = 0.5; // how far to stagger
        var pi2 = 3.1415926535 * 2.0; // range of angles
        var dir;
        var dist = R;

        rng.reseed(seed);
        // keep fractional positions; quantize only when indexing a pixel
        x = rng.get(0, w);
        y = rng.get(0, h);
        var sign = 0.0;
        var pixelBytes = w * h * 4; // 4 for RGBA

        // fill everything with [0, 0, 0, 255]
        for (i = 0; i < pixelBytes; i += 4) {
            dt[i] = 0; // R
            dt[i + 1] = 0; // G
            dt[i + 2] = 0; // B
            dt[i + 3] = 255; // A
        }

        var pixel;
        for (i = 0; i < il; i++) {
            j = Math.floor(x) + w * Math.floor(y);
            j *= 4; // Operate only on R channel; *4 for RGBA, no addition for R channel
            // note: % keeps the sign, so a negative position gives a negative
            // index, and the typed array silently ignores that write
            j = j % pixelBytes;
            dt[j] = Math.round(dt[j] * dnelb + c * blend);
            dir = rng.get() * pi2;
            x += Math.cos(dir) * dist;
            y += Math.sin(dir) * dist;
        }
    }

    var cv = document.getElementById("noiseCanvas");
    var ctx = cv.getContext("2d");
    var noiseData = ctx.createImageData(300, 300);
    drunkwalk(noiseData, 1, 20, 0.08, 255);
    ctx.putImageData(noiseData, 10, 10);

Also, I wonder what it would be like to call this 3 times, one for each R/G/B channel… Going to try that now.

Ah, that’s a relief, then!

I went ahead and adapted your code into my pattern library as “anglewalk”. I like the effects you get where length is between 1 and 4, a nice diffuse cloudy effect. I think I can use that somewhere.